Click on the flyer for additional information.

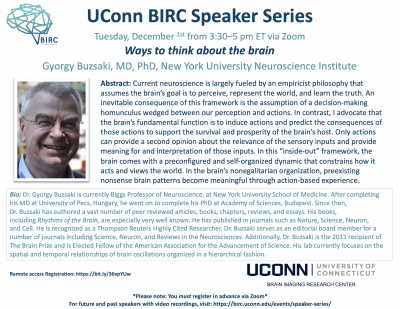

Virtual Talk: György Buzsáki, New York University

Ask a Brain Scientist!

In these free sessions, Prof. Fumiko Hoeft will engage with children about the intricacies of the brain. Children (and parents!) will learn about brain science on everyday topics, ask questions they might have, and get a glimpse into how research is done by a scientist.

For kids aged 8-13, but anyone with a child’s heart for learning is welcome!

Each session can stand on its own. When children attend all sessions, they will receive a Junior Neuroscientist certificate.

To register and for more information, please visit Haskinsglobal.org

This program is supported by UConn, UCSF, Haskins Laboratories, Yale University, Made by Dyslexia, and The International Dyslexia Association.

NSF Award to Erika Skoe and Emily Myers

Congratulations to Erika Skoe and Emily Myers on their NSF grant Neural predictors of individual differences in speech perception!

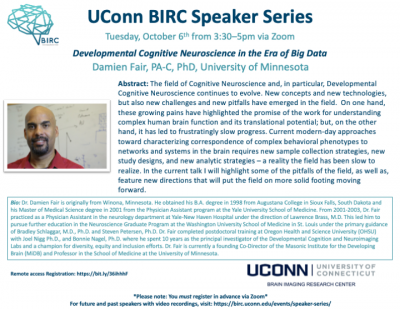

Virtual Talk: Damien Fair, University of Minnesota

NSF Award to Erika Skoe

Congratulations to Erika Skoe on her recently funded NSF project Dual language learning as a training ground for sensory processing!

Second OVPR REP Awarded to BIRC Faculty!

Congratulations to Margaret Briggs-Gowan (PI) and co-PIs Inge-Marie Eigsti, Letitia Naigles, Damion Grasso, Fumiko Hoeft, Carolyn Greene, and Brandon Goldstein on their Research Excellence Program (REP) award for Auditory threat processing in children at risk for posttraumatic stress disorder! Their project will use ERP and fMRI methods to assess threat reactivity in young at-risk children.

OVPR REP Awarded to BIRC Faculty!

Congratulations to Robert Astur (PI) and Fumiko Hoeft (co-PI) on their Research Excellence Program (REP) award for Using Transcranial Magnetic Stimulation to Reduce Problematic Cannabis Use in Undergraduates! Their project will test whether cravings and real-life use of cannabis can be reduced using TMS in UConn undergraduates who are at risk for cannabis use disorder.

Congratulations to BIRC Seed Grant Recipients

In spring 2020, BIRC awarded four seed grants, including two student/trainee grants. Congratulations to:

- Postdoc Airey Lau (Psychological Sciences) and faculty supervisors Devin Kearns (Education) and Fumiko Hoeft (Psychological Sciences) for Intervention For Students With Reading And Math Disabilities: The Unique Case Of Comorbidity

- Graduate student Sahil Luthra (Psychological Sciences) and faculty supervisors Emily Myers (Speech, Language & Hearing Sciences) and James Magnuson (Psychological Sciences) for Hemispheric Organization Underlying Models of Speech Sounds and Talkers

- Natalie Shook (PI, Nursing) and Fumiko Hoeft (Co-I, Psychological Sciences) for Identifying Neural Pathways Implicated in Older Adults’ Emotional Well-being

- William Snyder (PI, Linguistics) for Adult processing of late-to-develop syntactic structures: An fMRI study

BIRC provides seed grants to facilitate the future development of external grant applications. Seed grants are provided in the form of a limited number of allocated hours on MRI, EEG and/or TMS equipment at BIRC. These hours are intended to enable investigators to demonstrate feasibility, develop scientific and technical expertise, establish collaborations, and, secondarily, publish in peer-reviewed journals. Seed grants are intended for investigators with experience in the proposed methods, as well as those with little or no experience who have developed a collaborative plan to acquire such experience. New investigators are encouraged to consult with BIRC leadership early in the development of their project. For more information about the program, please visit our seed grant page.

IBRAiN students publish in Nature

Congratulations to two teams of IBRAiN (IBACS-BIRC Research Assistantships in Neuroimaging) graduate students (Yanina Prystauka, Emily Yearling, and Xu Zhang; Charles Davis and Monica Li) on their contribution to a recent study that examined variability in the analysis of neuroimaging data.

The scientific process involves many steps, such as developing a theory, creating hypotheses, collecting data, and analyzing the data. Each of these steps can potentially affect the final conclusions, but to what extent? For example, will different researchers reach different conclusions based on the same data and hypotheses? In the Neuroimaging Analysis, Replication and Prediction Study (NARPS), almost 200 researchers (from fields including neuroscience, psychology, statistics, and economics) teamed up to estimate how much the findings of brain imaging research vary as a result of researchers' choices about how to analyze the data. The project was spearheaded by Dr. Rotem Botvinik-Nezer (formerly a PhD student at Tel Aviv University and now a postdoctoral researcher at Dartmouth College) and her mentor Dr. Tom Schonberg from Tel Aviv University, along with Dr. Russell Poldrack from Stanford University.

First, a brain imaging dataset was collected from 108 participants performing a monetary decision-making task at the Strauss imaging center at Tel Aviv University. The data were then distributed to 70 analysis teams from across the world. Each team independently analyzed the same data, using their standard analysis methods to test the same nine predefined hypotheses. Each of these hypotheses asked whether activity in a particular part of the brain would change in relation to some aspect of the decisions that the participants made, such as how much money they could win or lose on each decision.

The analysis teams were given up to three months to analyze the data, after which they reported both their final outcomes for the hypotheses and detailed information on how they analyzed the data, along with intermediate statistical results. The fraction of analysis teams reporting a statistically significant outcome varied widely across hypotheses. For five of the hypotheses there was substantial disagreement, with 20-40% of the analysis teams reporting a statistically significant result; the other four hypotheses showed more consistency across teams. Interestingly, the underlying statistical brain maps were more similar across analysis teams than the diverse final hypothesis tests would suggest. Hence, even very similar intermediate results led to different outcomes across analysis teams. In addition, a meta-analysis (combining data across experiments, or in this case across analysis teams, in order to analyze them together) performed on the analysis teams' intermediate results showed convergence across teams for most hypotheses. The data did not allow testing of all of the factors related to variability, but some aspects of the analysis procedures were found to lead to more or fewer positive results.

A group of leading economists and behavioral finance experts provided the initial impetus for the project and led the prediction market part of the project: Dr. Juergen Huber, Dr. Michael Kirchler and Dr. Felix Holzmeister from the University of Innsbruck, Dr. Anna Dreber and Dr. Magnus Johannesson from the Stockholm School of Economics and Dr. Colin Camerer from the California Institute of Technology. Prediction markets are a tool that provides participants with real money they can then invest in a market – in this case, a market for the outcomes on each of the nine tested scientific hypotheses. Here, they were used to test whether researchers in the field could predict the results. The prediction markets revealed that researchers in the field were over-optimistic regarding the likelihood of significant results, even if they had analyzed the data themselves.

The researchers emphasize the importance of transparency and data and code sharing, and indeed all analyses in this paper are fully reproducible with openly available computer code and data.

The results of NARPS show for the first time that there is considerable variance when the same complex neuroimaging dataset is analyzed with different analysis pipelines to test the same hypotheses. This should certainly raise awareness among members of the neuroimaging research community, as well as in every other field with complex analysis procedures where researchers have to make many choices about how to analyze the data. At the same time, the findings that the underlying statistical maps were relatively consistent across teams and that meta-analyses led to more convergent results suggest ways to improve research.

Importantly, the fact that almost 200 individuals were each willing to put tens or hundreds of hours into such a critical self-assessment demonstrates the strong dedication of scientists in this field to assessing and improving the quality of data analyses.

The scientific community constantly aims to gain knowledge about human behavior and the physical world. Studying such complex processes frequently requires complex methods, big data, and complex analyses. The variability in outcomes demonstrated in this study is an inherent part of the complex process of obtaining scientific results, and we must understand it in order to know how to tackle it. As the recent COVID-19 pandemic made clear, even when taking into account the uncertainty inherent to the scientific process, there is no substitute for the self-correcting scientific method to allow the global human society to address the challenges we are facing.

The CT Institute for the Brain and Cognitive Sciences (IBACS) offers IBACS-BIRC Research Assistantships in Neuroimaging (IBRAiN). After formal training, IBRAiN fellows serve as a teaching resource, helping BIRC users design and implement experimental procedures for fMRI, EEG, TMS, and other methodologies, providing resources for data analysis, and overseeing the use of equipment by others. Click here for more information about applying to this program.